Sentence generation without transformers

Tuesday, August 15, 2023

Generative AI, such as ChatGPT, is all the rage these days. Transformer-based ones like BERT and ChatGPT can generate convincing text which sounds very human-like and authoritative. (Even if the model is lying through its teeth.)

That said, if we just want to generate text, we don’t have to rely on deep learning to do it. Rudimentary approaches like pre-computing token-to-token transition probabilities can go a long way. No need for word embeddings, no need for neural networks. In this article, I’ll talk through such an approach. 1

Where it started: predicting the next likely word

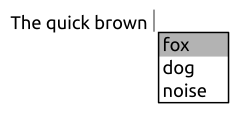

If I recall correctly, I was thinking about a way to “predict the next word” in the context of assisting text input. It was the late 2000s, so it would have been most applicable for text input on phones (which already existed at the time), but I was thinking about a use-case on the desktop.

Around that time, Stack Overflow started providing periodic data dumps which was a good source of getting human-written text and some code snippets. Also around that time, Google was providing n-gram data from books (and other printed texts), which also could have been a good data source.

For this attempt the Stack Overflow data set was used which generated sentences and (sometimes) code snippets… kinda sorta. 😜 So in a sense, a Github Copilot a decade earlier, but with a much, much, much lower efficacy.

I didn’t pursue this any further and can’t even find the code that was used at the time. But I recall the process was along the line of:

- Take the dataset (such as a Stack Overflow question or answer), break it down into individual articles (i.e., questions, answers.)

- Take the article text, remove the punctuation and tokenize on whitespace.

- For each token, calculate the probability of transitioning to another particular token.

From there, we get the token-to-token transition probabilities. Predicting the next word2 given the current one is a trivial task.

The natural next step was to attempt predicting further than just the next word, in essence, to write an answer or code on its own. Expressed in pseudocode, it would be something like this:

# A map containing probabilities of the next token for a given token.

probabilities: map<string, map<string, double>> = load_probabilities()

chosen_tokens = []

current_token = get_first_token()

while keep_going(chosen_tokens):

next_token = pick_highest_freq_token(probabilities[current_token])

chosen_tokens.add(next_token)

current_token = next_token

sentence = ' '.join(chosen_tokens)

In essence, it’s what we see today with ChatGPT and Github Copilot, just using very rudimentary methods, which didn’t work very well.

There’s a couple of problems with this approach:

First, the context of text generation is only a single token deep. Hence, generated text seems like a person that keep track of what they’re talking about.

Second, there’s no good way to stop generating text. Given that the original purpose was just to predict the next word and have a human choose whether it was a good choice, ending a sentence was not a consideration.

Refining the idea

Recently, I’ve revisited the concept with a couple of changes3:

- Preserving punctuation as tokens.

- Use not only bigrams but also trigrams for predicting the next token.

The example of outputs shown below are created from transition probabilities calculated from approximately 21,000 articles in the English Wikipedia dump.4 Hence, it’s going to have some bias to the contents from those articles. (That said, it appears to have a fairly broad number of topics covered from politics to computers.)

Preserving punctuation as tokens

Previously, by stripping out punctuation, sentences were missing commas and worst of all, there was no logical ending to text being generated. With punctuations preserved as tokens, the text generation can logically end: with a period. Another nice part is that sentences end up with commas to end clauses, and they tend to end up in the right place – just by using probabilities!

Here, we see a sentence flowing “naturally”, albeit the content being utter gibberish:

New York city of the North American singer-songwriter and in the same time, and other than a major role in the end of the largest city, which is a long as a.

Use not only bigrams but also trigrams for predicting the next token

Another improvement is to not only use bigrams but also trigrams for predicting the next token. (Trigrams are used to find the transition probability as a function of two prior tokens.)

With a bigram, the number of times a particular bigram (e.g., “the end”) appears can be used to calculate the transition probability from the first token to the next. In this example, the probability for “end” to appear after “the” will be calculated and used when the sentence is generated.

Similarly, in the case of using a trigram, the number of times a trigram (e.g., “president thomas jefferson”) appears is used to calculate the transition probability from the first two tokens to the next. The probability of “jefferson” following the tokens “president thomas” will be used when generating the sentence.

As seen above, using more tokens as the prior condition it allows extending the context to more than using just the previous token. Therefore, it tends to create sentences that keep track of the conversation a little better.

For example, the word that comes after the words wall street is journal:

Wall Street Journal of the other ..

As a counterexample, with just street, the next word won’t have journal, demonstrating the usefulness of keeping track of more tokens:

Street, and politician, American baseball player and a few months.

More examples

Let’s look at a few sentences generated with this approach:

- The World, in addition to its own.

- The War, with an “/> this is a large part of the country.

- The National League of two major cities, but also the US and are more than any of an important part in the West Bank, as well.

- The city ..

- The “, but it was also a variety of the second largest city, which is not in the late 19th century, with an attempt to the term “(d. 2019), but not a high Commission in the country, as well.

- The end of a group, which was published in the first of them to as a New York: 0 “/> the last few months later.

- The country, but it is an American baseball player and in the Battle of an embassy to a long, which is also be made in the “, but is used to use of the country.

- The Battle of an individual, “(b. C. AD) and other.

- The North and their first in September 11, which was an English footballer and the use of this is used in a common.

- The University of state, and that “(the World War II, in their “() and other hand, 000 years.

It doesn’t quite resemble sentences written by a real human being. 😅

There’s a few issues that we can see –

First, there are fragments of markup (e.g., "/>) that’s left in the text.

This is because the data from the Wikipedia dumps isn’t processed well enough to remove such markup.

Second, there’s quotation marks that are sprayed in the text as well. Since the sentence generation doesn’t really take into account opening/closing these punctuation marks, we end up with these kinds of oddities.

Finally, there’s a tendency for similar sequence of words to show up. Some randomness is included in the sentence generation, but for word sequences that don’t occur often, or occur very often, we can see these biases.

There’s definitely room for improvement!

The code

In essence, the approach can be summarized in pseudocode like this:

# Part 1: Find the transition probabilities from source data (e.g., wikipedia dumps)

bigram_counts: map<pair<string, string>, int> = init_map();

trigram_counts: map<triple<string, string, string>, int> = init_map();

while next_article() as article:

text = article.get_text()

tokens = split_text_into_tokens(text)

last_n_tokens: ring_buffer<string> = ring_buffer(capacity: 8)

for token in tokens:

bigram_counts = bigram_counts[get_last_two_tokens(last_n_tokens)]++

trigram_counts = trigram_counts[get_last_three_tokens(last_n_tokens)]++

# Stop at an end of the sentence.

if token == '.':

last_n_tokens.clear()

break

# The value of the map is a pair whose:

# - First value represents the previous 1 or 2 tokens, and

# - Second value represents the next token and the associated probability.

prob_from_prev_1_token: map<string, pair<string, double>> = bigram_count_to_probabilities(bigram_counts);

prob_from_prev_2_tokens: map<pair<string, string>, pair<string, double>> = trigram_count_to_probabilities(trigram_counts);

# Part 2: Sentence generation

first_word: string = get_input_from_user()

chosen_tokens: list<string> = [first_word]

previous_tokens: ring_buffer<string> = ring_buffer(capacity: 8)

previous_tokens.add(first_word)

while true:

next_token_candidates: list<pair<string, double>> = []

add_token_probabilities(next_token_candidates, prob_from_prev_1_token[get_last_1_token(previous_tokens)])

add_token_probabilities(next_token_candidates, prob_from_prev_2_token[get_last_2_token(previous_tokens)], weight=DIFFERENT_WEIGHT)

# Prevent a token from being chosen as next one if it appears recently.

next_token_candidates.filter(candidate -> !previous_tokens.contains(candidate.first))

next_token: string = choose_next_token(next_token_candidates)

chosen_tokens.add(next_token)

previous_tokens.add(next_token)

if next_token == '.'

break

# Assemble the sentence.

sentence = assemble_sentence(chosen_tokens)

print(sentence)

Note that the above:

- Ignores cases like not enough tokens in

last_n_tokensto get bigrams and trigrams. In reality, such checks are required, but are omitted for clarity. - The

split_text_into_tokensfunction contains a bit of heuristics to split text into what’s considered “good” tokens in our case. In particular, it keeps punctuation like commas, quotation marks and periods as individual tokens. This is important later, as a period ('.') is used as to end the sentence generation. It also include special handling for acronyms (and some abbreviations) to prevent premature sentence termination due to periods used in them. - The

choose_next_tokenfunction will choose from the likely candidates based on their probabilities, along with some randomization. Randomization prevents going into a loop of same sequence of tokens being chosen. - The

assemble_sentencefunction is essentially a string join on spaces except for punctuation which shouldn’t have spaces.

What next?

I’ll put the code up on Github once it’s in a more presentable form. It’s just a toy, so nothing too exciting, but might as well make it available if anyone wants to play with it. 😉

Final thoughts

Looking back, coming up with the initial algorithm is actually the easy part. It’s the fine-tuning necessary to improve the quality, both in the algorithm and in the processing of source data.

Creating “clean” text from source data is very hard – there can be many problems that can affect the final output. Especially text with markup can be a real pain to work with, especially when different types of markup is used throughout the source data. In that respect, using plain text sources like novels (such as those available on Project Gutenberg) tend to have relatively clean text.

-

And to be specific, this article will stick to an approach that’s applicable to English text. ↩

-

I use the term word and token interchangeably through this article, although they’re technically not the same as I consider punctuation and acronyms as tokens as well. ↩

-

After a bit of research, it turns out that this approach is essentially a variant of the word n-gram language model. ↩

-

In this article, a portion of the English Wikipedia dump from July 1st, 2023 (

enwiki-20230701-pages-articles-multistream1.xml-p1p41242.bz2) was used. ↩